What is a robots.txt file? What role does it play on your website? How do you set one up, what can you do with it, and where is it located?

Let’s find out in this article. Search engines like Google or Bing use programs that visit websites on the Internet, collect the necessary information, and keep moving from one website to another. These programs are called web crawlers, bots, spiders, or robots.

In the early days of the Internet, computing power and memory were very costly. Some website owners got very upset with the search engine crawlers of that time: there were fewer websites then, so these crawlers or robots visited each website repeatedly. As a result, the sites could not serve their real users because the server’s resources were already used up.

To deal with this problem, some people came up with the idea of robots.txt: a file that tells robots which folders of the website the owner permits them to crawl, which folders are off limits, and which robots each rule applies to.

What is a robots.txt file?

Robots.txt is a text file placed in the website’s root folder. Let’s take the example of xyz.com. When a search engine’s bot visits the website, it first checks xyz.com/robots.txt. If the file is not found, there is no issue: the robot will crawl the entire website at its own pace, index every part of it, and store the information. If it does find a file at xyz.com/robots.txt, it reads it and is expected to follow it. In the real world, however, data aggregators and email-harvesting bots created by hackers will ignore all instructions meant for bots.
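The behaviour described above can be sketched with Python’s standard `urllib.robotparser` module. This is only a minimal sketch, not how any particular search engine implements it: the helper name `rules_from_body`, the bot name `AnyBot`, and the `/private/` and `/public/` paths are assumptions made for illustration, and the file content is passed in as a string instead of being downloaded.

```python
from typing import Optional
from urllib.robotparser import RobotFileParser


def rules_from_body(body: Optional[str]) -> RobotFileParser:
    """Build crawl rules from robots.txt text; None means the file was not found."""
    parser = RobotFileParser()
    # An empty rule set allows everything, matching the "file not found" case.
    parser.parse((body or "").splitlines())
    return parser


# Case 1: xyz.com/robots.txt does not exist, so every page may be crawled.
missing = rules_from_body(None)
print(missing.can_fetch("AnyBot", "https://xyz.com/any/page.html"))

# Case 2: the file exists and closes one (hypothetical) folder to all bots.
present = rules_from_body("User-agent: *\nDisallow: /private/\n")
print(present.can_fetch("AnyBot", "https://xyz.com/private/a.html"))
print(present.can_fetch("AnyBot", "https://xyz.com/public/a.html"))
```

A well-behaved crawler would call `can_fetch` before every request; a bot that ignores the file simply never performs this check.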

Here are some characteristics that hold for the robots.txt file of any website.

Number 1: These files are always plain text files with the extension .txt. You can create one with any text editor, such as Notepad or Notepad++.

Number 2: robots.txt is always in the website’s main root folder, not inside any subfolder.

Number 3: Its name is always robots.txt. It cannot be robot.txt, and it cannot be capitalised, because the name is case-sensitive.

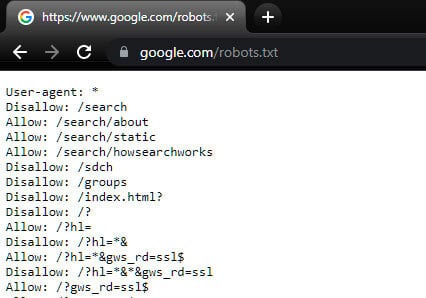

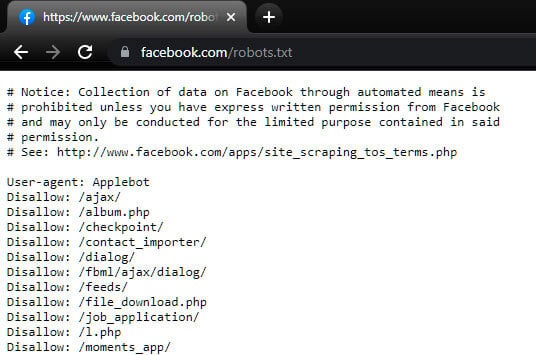

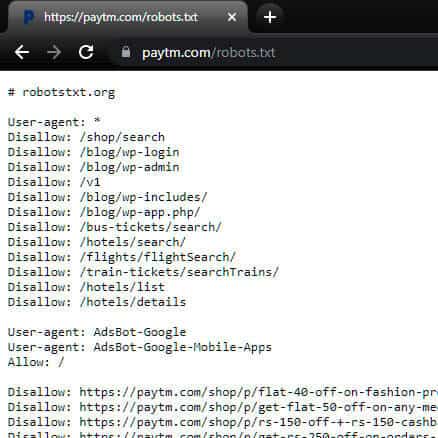

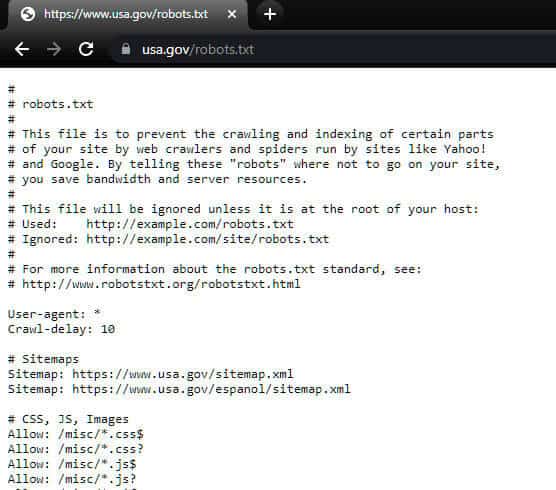

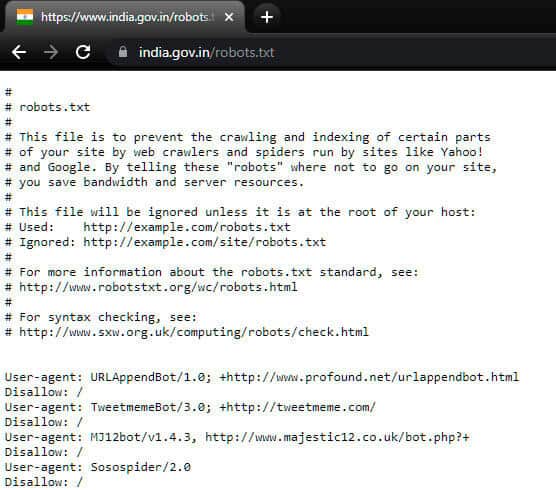

Number 4: You can see the robots.txt file of any website by adding /robots.txt at the end of the domain.

Number 5: There is no guarantee that a bot will obey the instructions in this file. Major search engine companies like Google, Yahoo, and Yandex follow these instructions, but small search engines and data aggregators often do not.

- These are always text files.

- Always in the root folder

- Always named robots.txt

- See the robots.txt file by adding /robots.txt at the end of any domain

- Search bots are not bound to follow it.
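Because the file always lives at the root under the same name, its address can be derived from any page URL. The following is a small sketch using Python’s standard `urllib.parse`; the function name `robots_url` is hypothetical, and it assumes the input is a full URL that includes the scheme (for example `https://`).

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt address for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host, replace the path, and drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://xyz.com/blog/post.html?page=2"))
```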

How to create robots.txt?

That was a lot of history. Now let’s see what goes inside this file. The two lines below are the minimum content of a robots.txt file. That’s it!

User-agent: *

Disallow:

If you want to allow all search engine robots to access all pages of your website, these two lines are all your robots.txt file needs. The first line, ‘User-agent: *’ (user-agent, colon, asterisk), means that the instructions apply to all search engine bots; as in most web technologies, the asterisk is a wildcard that means everything. The second line, ‘Disallow:’ with nothing after it, means that no part of the website is disallowed for any robot.

If the Disallow line contains a forward slash (‘Disallow: /’), it means that all files under the root directory are disallowed. Remember that every page link is formed by adding a forward slash and a path after the website’s domain. Even the homepage ends with /index.html or /index.php; the browser simply does not show it. So if you disallow the forward slash, you block all of your website’s files from search engines. If you want to stop only a particular search engine, replace the asterisk in the first line, ‘User-agent: *’, with the name of that search engine’s bot, and then write your instructions below the user-agent line. Each major search engine has its own bot name, like Google’s Googlebot, Yahoo’s Slurp, and Microsoft’s msnbot. If you want to see the list of all user agents, here is the link.
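To see how these variants behave, here is a minimal sketch that feeds three tiny rule sets to Python’s standard `urllib.robotparser`. The helper `parse_rules` and the constant names are assumptions made for this illustration. Each group’s lines are kept consecutive, because Python’s parser treats a blank line as the end of a group.

```python
from urllib.robotparser import RobotFileParser


def parse_rules(text: str) -> RobotFileParser:
    """Parse robots.txt text given as a string."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


ALLOW_ALL = "User-agent: *\nDisallow:\n"                 # nothing is blocked
BLOCK_ALL = "User-agent: *\nDisallow: /\n"               # everything is blocked
BLOCK_GOOGLEBOT = "User-agent: Googlebot\nDisallow: /\n"  # only one bot is blocked

page = "https://xyz.com/index.html"

allow_all = parse_rules(ALLOW_ALL).can_fetch("Googlebot", page)
block_all = parse_rules(BLOCK_ALL).can_fetch("Googlebot", page)

only_google = parse_rules(BLOCK_GOOGLEBOT)
googlebot_ok = only_google.can_fetch("Googlebot", page)
slurp_ok = only_google.can_fetch("Slurp", page)

print(allow_all, block_all, googlebot_ok, slurp_ok)
```

In the last rule set only the named bot is refused; any other bot, such as Slurp here, finds no group that applies to it and may crawl the page.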

Robots.txt file in SEO

Now the question is how robots.txt affects your SEO and what the advantage is. Currently, in the USA, Google handles the large majority of web search traffic. Google assigns a crawl budget to every website, which decides how often Google’s robot visits your website. This crawl budget depends on two things:

Number 1. Your server must not slow down during crawling. It should not happen that when Google’s robot visits, your website becomes slow for the actual visitors who are on it at that time.

Number 2. How popular your website is. Google wants to visit more popular websites with more content more often, to keep itself up to date with their content.

If you want to use your crawl budget well, you can manage it with robots.txt. You can block unimportant pages of your website, like the login page, folders containing interview documents, and pages with old duplicate content. By disallowing all of these for Googlebot, you save your crawl budget for the essential pages.

With robots.txt you can temporarily keep search engines away from your website’s under-maintenance pages. If there is something on your website that is only for your company’s employees and that you do not want shown publicly, you can also use the robots.txt file for that. Let us assume that your website is xyz.com, it has a folder named sample, and that folder contains a page sample.html. Use this code in your robots.txt file to hide the folder:

User-agent: *

Disallow: /sample

Apart from this, you can also give search robots a link to your website’s sitemap from the robots.txt file. For that, you only have to add this line:

Sitemap: https://technopro360.com/sitemap.xml

A while ago, we talked about how a website can become slow for regular visitors because of crawling by search engine bots. If your website attracts massive traffic, this slowdown can be costly. To prevent it, you can put a delay timer in your robots.txt file: after fetching one page, search engine robots will wait for a while before crawling the next page. This wait time, or crawl delay, is measured in seconds by the search engines that support it. To set it, add this line to your robots.txt:

Crawl-delay: 10

Set whatever crawl delay you want. In this example, ten means that after fetching one page, the crawler will wait ten seconds before moving on to another page, so your server gets breathing room and your website does not slow down. Not every crawler honours this directive; Googlebot, for example, ignores it.
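The three directives from this section can be combined in one file and checked with Python’s standard `urllib.robotparser`. This is a sketch under stated assumptions: the combined file below is assembled for illustration from the lines shown in this article, and `site_maps()` requires Python 3.8 or newer.

```python
from urllib.robotparser import RobotFileParser

# Illustrative file that combines the directives discussed in this section.
ROBOTS_TXT = """\
User-agent: *
Disallow: /sample
Crawl-delay: 10
Sitemap: https://technopro360.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The page inside the sample folder is hidden; the homepage is not.
hidden_page_ok = parser.can_fetch("*", "https://xyz.com/sample/sample.html")
home_page_ok = parser.can_fetch("*", "https://xyz.com/index.html")

# The delay a polite crawler should wait between requests.
delay = parser.crawl_delay("*")

# Sitemap URLs listed in the file (Python 3.8+).
sitemaps = parser.site_maps()

print(hidden_page_ok, home_page_ok, delay, sitemaps)
```

Disallow rules are prefix matches, so `/sample` also covers paths such as `/sample.html` or `/samples/`. Writing `/sample/` with a trailing slash restricts the rule to the folder itself.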

In July 2019, Google announced that it would stop supporting the ‘noindex’ directive in the robots.txt file. In response, Microsoft Bing said that it had never supported this directive.

Noindex is different from disallow. Disallow tells a robot not to crawl, while ‘noindex’ does not refuse crawling but refuses indexing. Noindex was never an official robots.txt rule, but Google was honouring it in approximately 90% of cases. A noindex request in robots.txt will no longer work, so the alternatives are to put a noindex tag on the page itself or to password-protect the page.
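The page-level noindex tag is usually written as `<meta name="robots" content="noindex">` in the page’s head. As a rough sketch, the following checks an HTML string for that tag with Python’s standard `html.parser`; the class name `RobotsMetaParser` and the function `has_noindex` are hypothetical names chosen for this example.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name="robots"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() != "robots":
            return
        # The content attribute is a comma-separated list of directives.
        for part in attr.get("content", "").split(","):
            if part.strip():
                self.directives.add(part.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(has_noindex(page))
```

A crawler can only see this tag if it is allowed to fetch the page, so a page carrying the noindex tag should not also be disallowed in robots.txt.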

Before we finish this article, let’s look at the robots.txt files of some well-known websites. This is the robots.txt file of facebook.com: a warning is present in the very first line, followed by a long list. Next is google.com’s robots.txt file, and then Paytm’s, which has a total of 5626 lines. Someone needs technical maintenance. Hmm!

By the way, the robots.txt files of the Indian government and the USA government start with exactly the same instructions, word for word, character for character. Interesting!

We hope this helps you maintain the robots.txt file of your website.